Industrial bin-picking applications usually involve a vision system that identifies and localizes the objects to be picked and often suggests an optimal picking strategy. In such applications, the robot moves from its starting position to the grasp position of the object inside the bin (as defined by the vision system), grasps the object, and moves it to the final placing position. However, this whole process raises some thorny questions:

How do we make sure that the robot will not collide with the environment during its movement? How do we know that the grasp position proposed by the vision system will lead to a successful grasp, especially given the uncertainties that result from poor lighting conditions, camera calibration errors, and errors in the object pose estimation? After the object is grasped, how do we make sure that it maintains its pose in the gripper until it is placed?

Task 3.1 of the PICKPLACE project aims to provide solutions for three problems: collision-free path planning of the robot, force-feedback grasping (reactive grasping) that improves the grasp position after first contact with the object, and monitoring and improving grasp stability during robot movement.

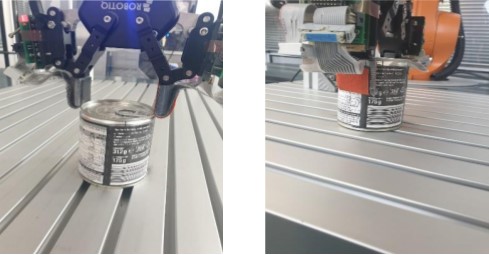

Figure 1: Reactive grasp example in the x direction. a) “Haptic glance” at the object with an unstable grasp. b) Re-grasp of the object after correction, at the corrected grasp position.

Robot motion planning

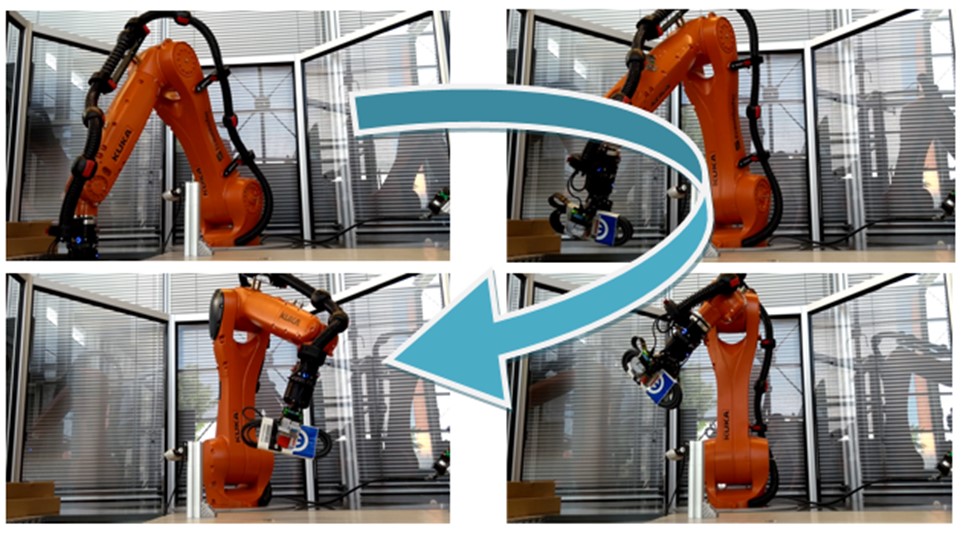

To plan the robot's picking and placing path, we employed MoveIt, an open-source, ROS-based framework that has been used successfully in the Amazon Picking Challenge.

MoveIt's motion planning algorithms move the specified tool of the robot to the grasp position provided by the vision system while constantly checking for static or dynamic obstacles in the robot environment and updating the robot trajectory in real time accordingly. To inform MoveIt about the robot environment, we modelled the cell area in CAD software and converted the model to the URDF format, which MoveIt can process. The MoveIt configuration can be transferred to other robots and environments by exchanging the URDF files of the robot and the environment.

Figure 2: Example of the path planning algorithm
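
To illustrate what a URDF description of the cell can look like, the following minimal sketch builds a tiny URDF document with one box-shaped obstacle attached to a world frame. It is not the project's actual cell model: the names `pickplace_cell`, `table` and `world_to_table`, as well as all dimensions, are assumptions chosen for illustration. A real cell would normally be exported from the CAD model as meshes.

```python
import xml.etree.ElementTree as ET


def box_link(name, size_xyz):
    """Build a URDF <link> whose visual and collision geometry is the same box."""
    link = ET.Element("link", name=name)
    size = " ".join(f"{v:g}" for v in size_xyz)
    for tag in ("visual", "collision"):
        geometry = ET.SubElement(ET.SubElement(link, tag), "geometry")
        ET.SubElement(geometry, "box", size=size)
    return link


def fixed_joint(name, parent, child, xyz):
    """Build a fixed URDF <joint> that places `child` at `xyz` in the `parent` frame."""
    joint = ET.Element("joint", name=name, type="fixed")
    ET.SubElement(joint, "parent", link=parent)
    ET.SubElement(joint, "child", link=child)
    ET.SubElement(joint, "origin",
                  xyz=" ".join(f"{v:g}" for v in xyz), rpy="0 0 0")
    return joint


def build_cell_urdf():
    """Return a URDF string with a world frame and one table obstacle."""
    robot = ET.Element("robot", name="pickplace_cell")  # hypothetical name
    robot.append(ET.Element("link", name="world"))
    # Assumed table top: 1.2 m x 0.8 m x 0.05 m, centred 0.4 m above the floor.
    robot.append(box_link("table", (1.2, 0.8, 0.05)))
    robot.append(fixed_joint("world_to_table", "world", "table", (0.6, 0.0, 0.4)))
    return ET.tostring(robot, encoding="unicode")


urdf_text = build_cell_urdf()
print(urdf_text)
```

Because the obstacle carries a `collision` element, a planner that reads this description can treat the table as a static obstacle. Swapping the environment then amounts to generating or exporting a different URDF file.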

Reactive Grasping using tactile sensors

After the robot reaches the grasp position determined by the vision system and planned by MoveIt, it needs to be sure that its first contact with the object will lead to a successful grasp before MoveIt starts planning the transportation.

To achieve that, we designed a compliant piezoresistive tactile sensor with a 2 mm spatial resolution and mounted it on the contact areas of the 2-finger gripper used in the PICKPLACE project. During operation, the gripper detects the first contact with the object and, based on the haptic feedback, improves the grasp position. The high resolution of our sensors enables the algorithm to calculate the position of the contacted area of the object with respect to the gripper. Inspired by the human grasp, we assume that a stable grasp is achieved when the contact area of the gripper covers as much of the object’s surface as possible. Based on that grasping goal and the sensor configuration, we can calculate the corrections to the gripper position relative to the part that are needed to ensure that the gripper makes optimal contact with the object, resulting in a more stable grasp.
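
The idea of deriving a position correction from the tactile image can be sketched as follows. This is a simplified stand-in, not the project's algorithm: it assumes that centring the contact patch on the sensor is a reasonable proxy for maximizing the covered area, that a tactile frame is a 2D list of pressure values, and that a threshold of 0.1 separates contact from noise. The function names and the threshold are hypothetical; only the 2 mm pitch comes from the text.

```python
PITCH_MM = 2.0  # spatial resolution of the tactile sensor stated in the text


def contact_centroid(frame, threshold):
    """Return the pressure-weighted (row, col) centroid of all taxels above
    `threshold`, or None when no taxel is in contact."""
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            if value > threshold:
                total += value
                row_sum += r * value
                col_sum += c * value
    if total == 0.0:
        return None
    return row_sum / total, col_sum / total


def grasp_correction_mm(frame, threshold=0.1):
    """Offset (dx, dy) in mm from the sensor centre to the contact centroid.

    Moving the gripper by this offset would centre the contact patch on the
    sensor (assumed proxy for a better grasp). Returns None without contact.
    """
    centroid = contact_centroid(frame, threshold)
    if centroid is None:
        return None
    center_row = (len(frame) - 1) / 2.0
    center_col = (len(frame[0]) - 1) / 2.0
    dy = (centroid[0] - center_row) * PITCH_MM
    dx = (centroid[1] - center_col) * PITCH_MM
    return dx, dy


# Example: the object touches only the right-hand edge of a 5 x 5 sensor.
frame = [
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
correction = grasp_correction_mm(frame)
print(correction)
```

In this example the contact patch sits 1.5 taxels to the right of the sensor centre, so the sketch suggests shifting the gripper in x while leaving y unchanged.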

Because our tactile sensor is compliant, it is physically deformed by the object it is grasping. After multiple grasps, these deformations can lead to false readings. We limit the impact of this issue by applying multiple filters and downsampling the tactile data before calculating the position correction.
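
The text does not specify which filters are used, so the following is only a sketch of one plausible preprocessing chain under stated assumptions: a per-taxel baseline subtraction to remove offsets left by sensor deformation, a temporal median over a few frames to suppress spikes, and block averaging as the downsampling step. All function names and the choice of filters are assumptions.

```python
from statistics import median


def temporal_median(frames):
    """Per-taxel median over a short window of frames to suppress spikes."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[median(f[r][c] for f in frames) for c in range(cols)]
            for r in range(rows)]


def subtract_baseline(frame, baseline):
    """Remove each taxel's unloaded reading, clamping the result at zero."""
    return [[max(v - b, 0.0) for v, b in zip(row, base_row)]
            for row, base_row in zip(frame, baseline)]


def downsample(frame, factor):
    """Average non-overlapping factor x factor blocks.

    Rows and columns that do not fill a complete block are dropped.
    """
    rows = len(frame) // factor
    cols = len(frame[0]) // factor
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [frame[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# Three consecutive 2 x 2 frames; the middle one contains a spike (40.0).
frames = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[1.0, 2.0], [3.0, 40.0]],
    [[1.0, 2.0], [3.0, 4.0]],
]
baseline = [[1.0, 1.0], [1.0, 1.0]]  # assumed reading of the unloaded sensor

smoothed = temporal_median(frames)
cleaned = subtract_baseline(smoothed, baseline)
reduced = downsample(cleaned, 2)
print(reduced)
```

Re-recording the baseline with the gripper open between grasps would be one way to track slow deformation of the sensor; whether the project does this is not stated.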

We tested our algorithm with various objects of different sizes, shapes and materials in challenging grasp positions and achieved a success rate of 82%. This is a considerable improvement over a non-adaptive grasp at the same positions, which had a success rate of 20%.

Grasp Monitoring

After the grasp is improved, the object is transported to the placing position. During transportation, however, the object can move within the gripper. This can be caused by several factors, including the object's weight, collisions with other objects in the bin, and insufficient grasping force. Therefore, we perform slip detection during transportation. We use our force-sensitive tactile sensor to detect signal fluctuations that indicate movement of the object in the gripper while the robot is moving. The slip detection is based on evaluating the difference between two consecutive signals and the covariance function of two signals. To test it, we provoked collisions with the grasped object while the robot was moving, using 8 different objects. The slip detection algorithms achieved 99% reliability when collision forces were higher than 5 N.
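
The two criteria named above can be sketched as follows. This is an illustrative interpretation, not the project's implementation: it assumes that each signal is a flattened tactile frame, that slip shows up either as a large mean absolute difference between consecutive frames or as a low normalized covariance between them, and that the two thresholds (0.2 and 0.8) are placeholders that would need tuning on real data.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equally long signals."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def covariance(a, b):
    """Population covariance of two equally long signals."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / len(a)


def normalized_covariance(a, b):
    """Covariance scaled to [-1, 1]. Flat signals carry no pattern
    information, so 1.0 is returned and only the difference test decides."""
    var_a = covariance(a, a)
    var_b = covariance(b, b)
    if var_a == 0.0 or var_b == 0.0:
        return 1.0
    return covariance(a, b) / (var_a * var_b) ** 0.5


def slip_detected(prev, curr, diff_threshold=0.2, corr_threshold=0.8):
    """Flag slip when consecutive frames differ strongly or decorrelate.

    Both thresholds are hypothetical placeholders.
    """
    return (mean_abs_diff(prev, curr) > diff_threshold
            or normalized_covariance(prev, curr) < corr_threshold)


# Example: the contact pattern moves two taxels along a 1 x 6 sensor strip.
before = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0]
noisy = [0.01, 0.0, 1.0, 0.98, 0.0, 0.01]   # same contact, small sensor noise
shifted = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0]    # object has slipped

print(slip_detected(before, noisy))
print(slip_detected(before, shifted))
```

Combining both tests is a design choice of this sketch: the difference test reacts to changes in overall pressure, while the covariance test reacts to a contact pattern that keeps its intensity but changes its position.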